The open public consultation was intended to gather general views on the scope of the problem and its impact on society, online businesses and national authorities; on the effectiveness of current measures to tackle illegal content; and on stakeholders’ preferences for possible additional measures and their impact. The consultation was also intended to explore, in particular with online hosting service providers and national authorities, the take-up of the guidelines proposed by the Commission in the Recommendation on further measures to tackle illegal content online (C(2018) 1177 final).

The public consultation was complemented by other consultation tools, including a Flash Eurobarometer targeting individuals.

Who replied?

The public consultation received a total of 8,961 replies, of which 8,749 are from individuals, 172 from organisations, 10 from public administrations, and 30 from other categories of respondents.

The questionnaire was tailored to each category of respondents. It was disseminated to citizens and to representatives of hosting service providers and civil rights associations through the Commission’s website and newsletter, advertisements on the main social media channels, and the targeted consultations organised in parallel.

Individuals

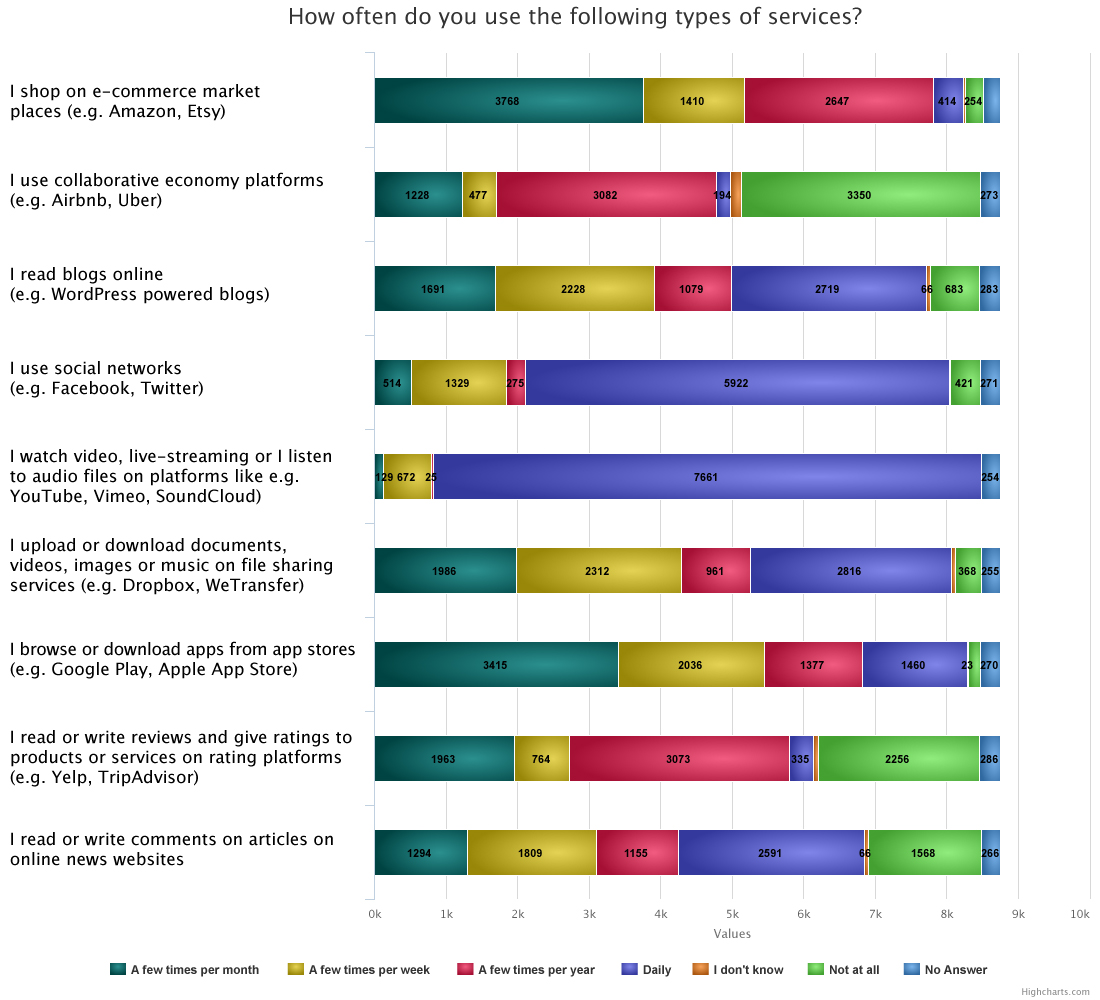

Respondents in the sample were very active users of online platforms and other types of hosting services. The most intensively used services were audio/audiovisual media sharing platforms, e-commerce marketplaces, file sharing and transfer services, social media platforms and blog-hosting platforms.

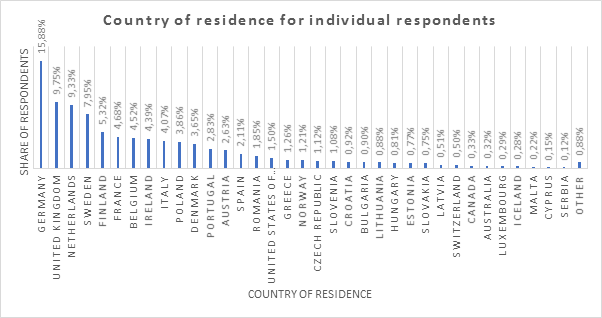

Most responses came from Germany (16%), followed by the United Kingdom and the Netherlands (just under 10%), Sweden (8%) and Finland (just under 6%). Fewer than 5% of responses came from 35 non-EU countries, with the highest numbers of replies coming from the United States, Norway and Canada. Detailed country statistics are presented in the graphic below.

Organisations

Organisations responded to a specifically designed questionnaire tailored to their respective profiles, as follows:

- 18 hosting service providers (HSPs) and 4 major business associations replying on behalf of online HSPs, including one association specifically representing start-ups.

- 10 competent authorities, including law enforcement authorities, Internet referral units, ministries or consumer protection authorities.

- 39 replies were submitted by not-for-profit organisations identifying and reporting allegedly illegal content online, including 3 from non-EU countries. 21 of these respondents reported being “trusted flaggers” with a privileged status granted by HSPs.

- 23 for-profit organisations identifying and reporting allegedly illegal content, and 10 organisations or businesses representing victims (mostly concerning infringements of intellectual property rights).

- 46 replies from other industry organisations.

- 30 replies from other types of respondents, including international organisations, political parties and other civil rights advocates.

- 21 organisations representing civil rights interests.

- 11 research or academic organisations.

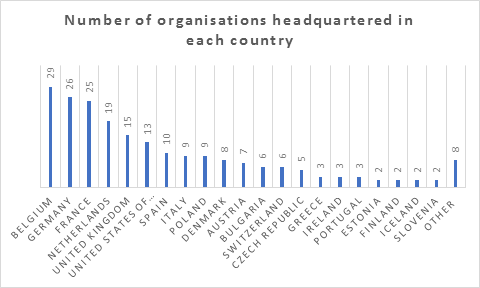

29 of the organisations were headquartered in Belgium, 26 in Germany, 25 in France, 19 in the Netherlands, and 15 in the United Kingdom. The graph below gives a more complete list of countries.

The hosting services that replied to the consultation covered cloud services, video, audio and image sharing, e-commerce marketplaces, file sharing, social networks, app distribution, video streaming, rating and reviews, and collaborative economy services. 14 are established in at least one EU Member State, while 4 are not established in the EU. 7 reported being micro-enterprises, 4 small and medium-sized enterprises, and 7 large enterprises.

Preliminary overview of the replies

Individuals

- Individuals were the largest category of respondents, with 8,749 replies.

- Over 75% of individuals considered the Internet to be safe for its users, and 70% reported never having been a victim of any illegal activity online.

- 33% of individual respondents reported having seen allegedly illegal content and having reported it to the hosting service; over 83% of these found the procedure easy to follow.

- The overwhelming majority of respondents to the open public consultation said it was important to protect free speech online (90% strongly agreed), and nearly 18% thought it important to take further measures against the spread of illegal content online.

- 30% of the respondents who reported that their content had been wrongly removed had issued a counter-notice to contest the removal.

- 64% of the respondents whose content was removed found both the content removal process and the process to dispute removals lacking in transparency. Nearly half of the individuals who had flagged a piece of content did not receive any information from the service about their notice, while one third reported having been informed of the follow-up given to it.

- One fifth of the respondents who had their content removed from hosting services (450 out of 2,000) reported not having been informed of the grounds for removal at all.

- Half of the respondents considered that hosting services should immediately remove content notified by law enforcement authorities. Half of the respondents opposed fast removal of content flagged by organisations with expertise (trusted flaggers) other than law enforcement.

Organisations

The response rate for each type of organisation does not allow for a meaningful quantitative analysis of the replies.

- Hosting service providers replying to the consultation were generally open to cooperation, including with government agencies or law enforcement flagging illegal content. Larger companies reported using proactive measures besides notice-and-action systems, including content moderation by staff, automated filters and, in some cases, other automatic tools that flag identified content to human reviewers; responses also showed that smaller companies have more limited capabilities. Overall, hosting service providers did not support additional regulatory intervention of a horizontal nature, but supported voluntary measures and cooperation to a certain degree.

- Associations representing large numbers of hosting service providers considered that, if legal instruments were envisaged at EU level, they should be problem-specific and targeted. They broadly supported harmonising the information contained in notifications, but expressed concerns about the feasibility of strict time limits for taking down content from the time it is uploaded, pointing to overly burdensome processes, especially for SMEs, and to general incentives that would lead to over-removal of legal content.

- Public authorities reported difficulties in identifying the sources of illegal content online and, consequently, a lack of evidence for related judicial action. The time taken to remove illegal content was also considered a critical point. Some respondents said that a clear and precise legislative framework was needed, one that would take into account the different actors operating in the EU; others emphasised the importance of strong and effective cross-border cooperation between national regulatory authorities.

- Amongst the organisations, both for-profit and not-for-profit, reporting allegedly illegal content to hosting services, 26 were mainly concerned with copyright infringements, 5 with child sexual abuse material and 3 with illegal commercial practices online. Respondents emphasised the need for swift and user-friendly collaboration with hosting services. Some underlined the usefulness of automated tools, while others stressed that such tools need to be reliable and combined with human verification. Of these respondents, 21 considered that setting time limits for processing referrals and notifications from trusted flaggers was important to support cooperation between hosting service providers and trusted flaggers.

- Civil society organisations representing civil rights expressed concerns about the impact that proactive measures or new legislative measures may have on freedom of expression and information. Respondents agreed with reinforcing public authorities’ capabilities to fight illegal content online. Several civil society organisations emphasised the need to also focus on prosecuting providers of illegal content.

- Intellectual property rights owners and their associations participated in the public consultation via different respondent groups. These include publishers, film and music labels, media and sports companies, as well as trademark owners. They generally supported greater responsibility for hosting service providers, and proposals to establish “stay-down” obligations for infringing content featured in individual submissions.

- Researchers and academic organisations highlighted the complexity and the delicate balance involved in dealing with illegal content online. They generally considered that different kinds of illegal content needed different frameworks. They pointed out that companies have few real incentives (other than sporadic media attention) to deal with counter-notices, whereas failing to act on notices can more easily lead to legal consequences. They also underlined that existing counter-notice procedures are largely underused and that their results are not transparently reported. Academics also expressed concerns about the performance of automated tools and underlined the need for safeguards for fundamental rights and for transparency in the process of detecting and removing allegedly illegal content online.

This report should be regarded solely as a summary of the contributions made by stakeholders on the "Measures to further improve the effectiveness of the fight against illegal content online". It cannot in any circumstances be regarded as the official position of the Commission or its services.


Detailed contributions

- Answers of individuals

- Answers of organisations

- Documents attached to the survey: anonymised (as requested by the respondent in the survey) and non-anonymised.